What’s Inside the Black Box?

Why It’s Time to Question How Your AI Makes Decisions

AI is everywhere. It translates your documents, drafts your emails, maybe even helps you write your product descriptions.

But here’s the uncomfortable truth: Most people – and most companies – have no idea how their AI actually works.

That’s because most AI systems are what the industry politely calls “black boxes”. You feed them data. You get a result. But the logic in between? Opaque, proprietary, or too complex to interpret – even for the people who built it.

In day-to-day life, you might accept that trade-off. In enterprise environments – where trust, compliance, and intellectual property are on the line – you shouldn’t.

At Exfluency, we think it’s time to stop treating AI like magic. It’s time to open the box.

What Is a Black-Box Model?

A black-box model is an AI system that produces outputs without offering a clear explanation of how those outputs were generated.

In technical terms: it’s an algorithm you can’t meaningfully interrogate. In practical terms: it’s a liability.

It’s like asking a stranger to write your investor report in a language you don’t speak – and not being allowed to ask where they got their information.

The Risks Are Real and Rising

The use of black-box AI in language workflows is growing fast. So are the consequences.

When organisations plug enterprise data into systems they don’t control, they’re exposed to risks that include:

Bias and distortion

AI can embed cultural, political, or factual bias that’s hard to detect – until it’s too late.

Data leakage

Inputs and outputs may be stored, logged, or used to train external models. Your internal strategy documents or proprietary content could become part of someone else’s language model.

Loss of voice

You can’t enforce your terminology, tone, or regional preferences. Your brand starts to sound like everyone else’s – or worse, like no one at all.

Regulatory uncertainty

You can’t prove how or where decisions were made. That’s a growing problem in regulated industries like healthcare, finance, and government.

It’s not just about quality anymore. It’s about control.

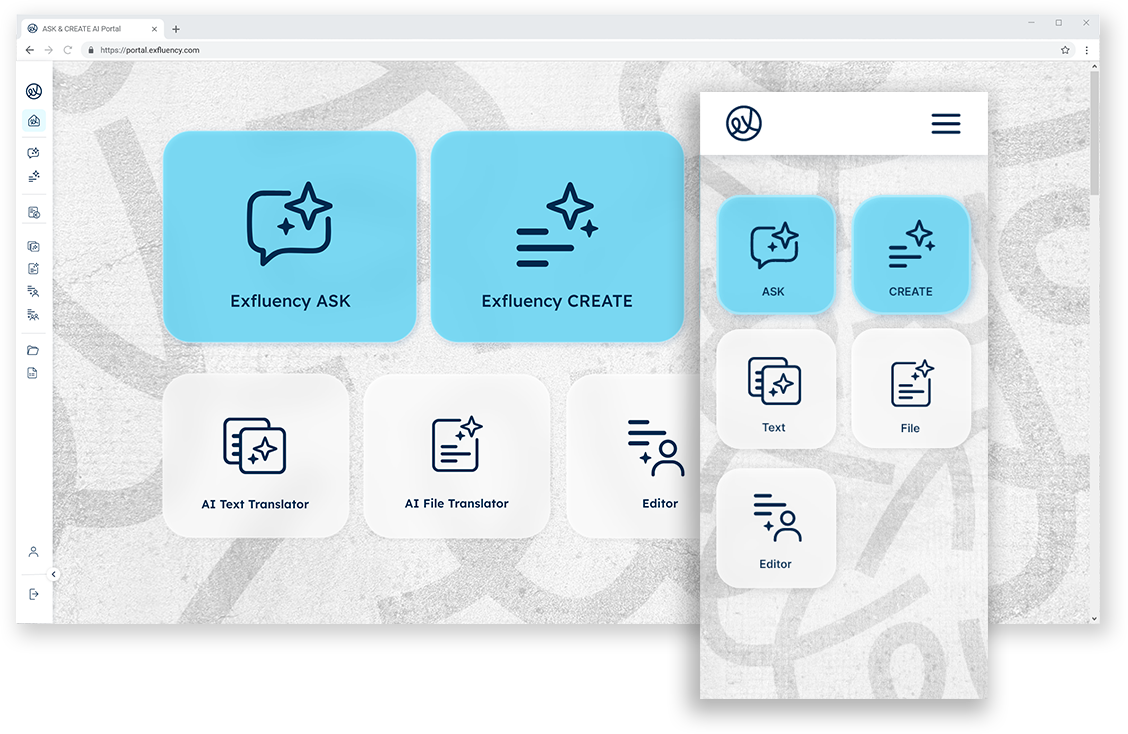

See it in action:

We’ll show you how to build AI-powered language workflows that respect your data, your voice, and your business.

Exfluency’s Answer: Own the Process

“We’re building middleware that only uses a language model to formulate answers – it never takes your data with it.”

– Robert Etches, CEO of Exfluency

At Exfluency, we don’t believe in mystery. We believe in transparency, traceability, and choice.

That’s why we’ve designed our platform from the ground up to reject the black-box approach.

Here’s how:

AI Agnosticism

You choose the model. Llama. Mistral. Qwen. ChatGPT. DeepSeek. You can switch at any time – because lock-in is just another form of opacity.
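To make the idea of switching models concrete, here is a minimal sketch of a provider-agnostic layer. It is an illustration only, not Exfluency's implementation: `ModelRouter`, `stub_llama`, and `stub_mistral` are hypothetical names, and the stub functions stand in for real model clients.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Assumption: a backend is any callable that turns a prompt into text.
Backend = Callable[[str], str]


@dataclass
class ModelRouter:
    """Hypothetical router that hides which model sits behind a workflow."""
    backends: Dict[str, Backend]
    active: str

    def switch(self, name: str) -> None:
        # Refuse unknown backends so a typo cannot silently change behaviour.
        if name not in self.backends:
            raise KeyError(f"unknown backend: {name}")
        self.active = name

    def complete(self, prompt: str) -> str:
        # Calling code never names a vendor; it only talks to the router.
        return self.backends[self.active](prompt)


# Stub backends (placeholders, not real model clients).
def stub_llama(prompt: str) -> str:
    return f"[llama] {prompt}"


def stub_mistral(prompt: str) -> str:
    return f"[mistral] {prompt}"


router = ModelRouter({"llama": stub_llama, "mistral": stub_mistral}, active="llama")
print(router.complete("Translate: hello"))
router.switch("mistral")
print(router.complete("Translate: hello"))
```

Because the rest of the workflow depends only on `complete`, swapping the model is a one-line change rather than a rewrite.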

Data Sovereignty

Your content doesn’t feed external models. Our middleware ensures data stays within your ecosystem. That means your knowledge remains yours.

Explainable Workflows

Every step is logged – from machine translation to human post-editing – so you can see exactly how a final version came to be.
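One way to picture step-by-step logging is an append-only trail in which each entry records who did what. The sketch below is an assumption for illustration, not a description of Exfluency's platform: `AuditTrail` and its field names are hypothetical, and linking entries by hash is one possible way to make later edits to the log detectable.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

_FIELDS = ("step", "actor", "text", "prev")


def _digest(body: dict) -> str:
    # Stable serialisation so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    """Hypothetical append-only log of workflow steps."""
    entries: List[dict] = field(default_factory=list)

    def record(self, step: str, actor: str, text: str) -> dict:
        # Each entry stores the previous entry's hash, forming a chain.
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {"step": step, "actor": actor, "text": text, "prev": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash; any edited or reordered entry breaks the chain.
        prev_hash = ""
        for entry in self.entries:
            body = {key: entry[key] for key in _FIELDS}
            if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("machine_translation", "model:stub", "Bonjour le monde")
trail.record("human_post_edit", "editor:alice", "Bonjour tout le monde")
print(trail.verify())
```

Reading the entries in order shows how the final version came to be, and `verify` reports whether the record has been altered since it was written.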

Modular Design

Our architecture allows your IT and compliance teams to define boundaries, set usage policies, and stay in control. No hidden pipelines. No shadow data transfers.

AI That Can’t Be Explained Can’t Be Trusted

As enterprises deepen their reliance on generative AI, they’re starting to realise what’s at stake.

The tools you use today shape your operational resilience tomorrow. If your AI provider can’t – or won’t – show you what happens under the hood, then it’s not your AI. It’s theirs.

And if it’s theirs, so is your data.

At Exfluency, we’re building for a different future – one where language workflows are secure, explainable, and designed with your autonomy in mind.

Because your voice matters.

Your data matters.

And language deserves better than a black box.

Ask the Hard Question

We get it. Black-box AI is easy. It’s fast. It’s everywhere. But if your business relies on accurate, secure, and explainable multilingual communication, you need to ask one simple question before you plug in the next tool:

“Can I see what’s inside the box?”

If the answer is no – walk away.

If the answer is yes – then it’s time we talked.

Don’t settle for black-box AI.

Let us show you what transparent, secure, and scalable language solutions actually look like.